Memory was the major bottleneck for this CPU. See the question for more details and conclusions. Once the memory is tuned, I will report back with the final numbers for comparison, so there is some hope for better performance to come. This question has not garnered much interest, so this will likely be the last entry unless someone specifically asks a question.

Separately, I want to compare different encryption solutions for encrypting my system, possibly different solutions for different parts of the system such as /usr or /home. In particular I look at dm-crypt partitions, containers, truecrypt, ecryptfs and encfs with different parameters.


There seems to be only one X.M.P. memory profile available in this case besides the default setting. The other issue is setting dual channel rather than single channel memory access. That will take a moment with the manual to determine how to install the memory to enable this setting.

A test build with some different software took more than 20% off the build time with the better X.M.P. profile.


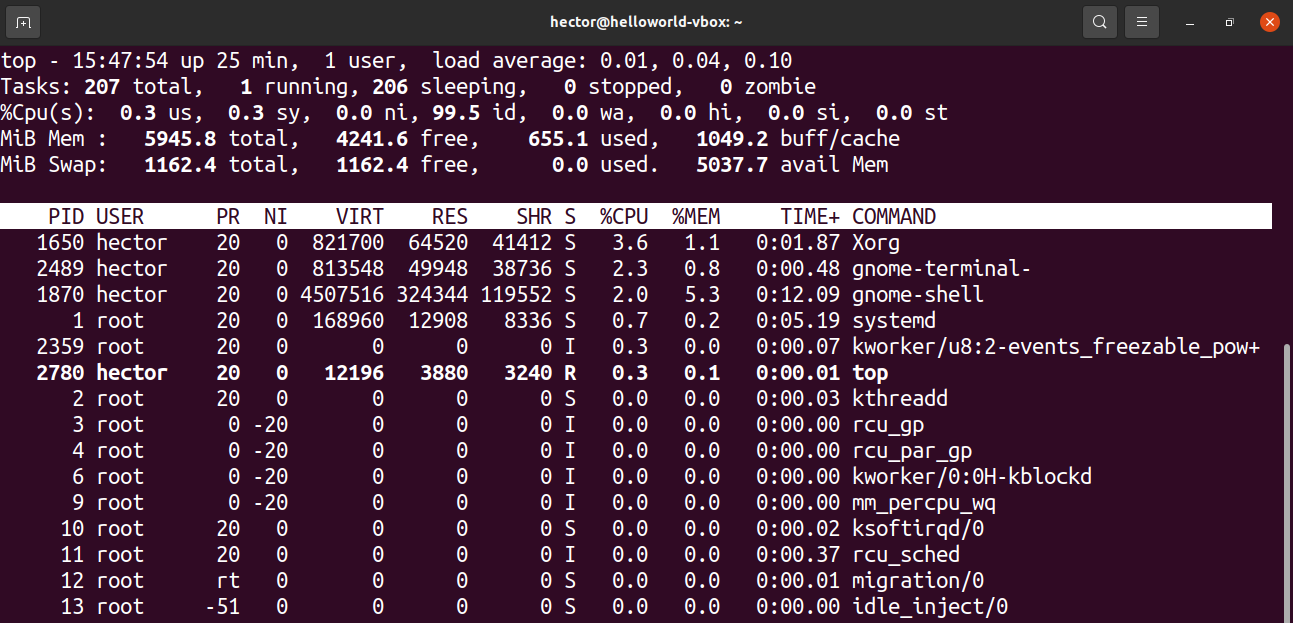

Just by disabling the hyperthreading feature, compile time dropped from 17:25 to 13:44. That is a reduction of over 20%, a difference of 3 minutes 41 seconds.

Before any memory tuning, compile time decreased monotonically, but not linearly, until make -j12, which took 12:46 with a dozen processes. The compile time then increased slowly and monotonically out to the last run of make -j24. Whatever the bottleneck is, it is hit after 12 parallel threads.

After tuning the X.M.P. memory profile, but still with single channel access, the times decreased until a minimum of 10:08.5 was reached at 16 threads, that is, as many threads as cores (no HT enabled). With one exception, the compile times then increased slowly to 10:41 at 32 threads.

Once dual channel memory was enabled, times decreased to 6:47.5 at 17 threads. After this point, the timings wobbled up and down, with another minimum of 6:46.6 at 20 threads. This is probably a fluke and likely not a definitive minimum. Only a maximum of 24 threads was tested in this case.

It seems that memory is a huge factor and the bottleneck for this processor, and it must be configured properly to take advantage of the CPU. Tossing more processing threads at the problem once memory is configured optimally does not seem to help or hinder much. The old rule of as many threads as processors seems to hold. Hyperthreading was not tested beyond the first tests, so no conclusions can be drawn in that area, other than that the default configuration with single channel memory probably starves the hyperthreaded cores for memory accesses.

This turns out to be more of a hardware problem than a software engineering issue, perhaps. There is a memory setting in modern BIOSes called X.M.P. By default, this setting is at the lowest common denominator for what memory the mainboard supports. To take full advantage of the memory installed, one has to go into the BIOS and set the X.M.P. profile.
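The percentages quoted here are easy to check by hand. As a rough sketch, the Python below converts the MM:SS timings from the text into seconds and computes the savings. The helper names `parse_time` and `reduction` are made up for this illustration, and pairing up the best run of each memory configuration is my own assumption about which numbers are fair to compare.

```python
def parse_time(text: str) -> float:
    """Convert a timing such as '17:25' or '10:08.5' into seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + float(seconds)


def reduction(before: str, after: str) -> tuple[float, float]:
    """Return (seconds saved, percent reduction) going from before to after."""
    b, a = parse_time(before), parse_time(after)
    return b - a, 100.0 * (b - a) / b


# Timings taken from the text; the pairing of runs is an assumption.
comparisons = [
    ("disable hyperthreading", "17:25", "13:44"),
    ("tune X.M.P. profile (best runs)", "12:46", "10:08.5"),
    ("enable dual channel (best runs)", "10:08.5", "6:46.6"),
]

for label, before, after in comparisons:
    saved, percent = reduction(before, after)
    print(f"{label}: {saved:.1f} s saved, {percent:.1f}% shorter")
```

The first comparison works out to 221 seconds, which is the 3 minutes 41 seconds mentioned above, or a bit over 21%.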

Why do the compile times not vary significantly between different era CPUs, even though disk (NVMe vs. HDD) and CPU benchmarks vary significantly in performance? Why does disabling hyperthreading affect performance significantly with the Ryzen CPU?

Over the past few months I have seen some different machines, all running Linux, with CPUs ranging from about 9 years since release to recently released. The newer CPUs received much higher benchmark numbers. Details are below, including compile times for the Linux 4.4.176 kernel using Ubuntu 18.04.

To put it simply, CPUs that scored multiple times faster in benchmarks most certainly did not decrease compile times by the same factor. In fact, sometimes only meager improvements were seen. The Ryzen machine has all the latest hardware gadgets. What would be the bottleneck to change or fix? Or is this a case of synthetic benchmarks vs. real-world workloads?

This question touches on the topic of benchmarking by compile time comparison, but does not explore the (lack of) variability in build times seen.

Source: Linux kernel, 4.4.176.
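For anyone wanting to repeat this kind of measurement, a thread-count sweep could be scripted roughly as follows. This is only a sketch and not the procedure used for the numbers in this post: the function names `time_command`, `sweep` and `best` are hypothetical, and it assumes that running a clean step before each build is enough to make runs comparable.

```python
import subprocess
import time


def time_command(cmd: list[str]) -> float:
    """Run a command to completion and return the wall-clock seconds it took."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True,
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def sweep(build_cmd: list[str], clean_cmd: list[str], job_counts) -> dict[int, float]:
    """Time build_cmd with -jN appended for each N, cleaning before every run."""
    results = {}
    for jobs in job_counts:
        subprocess.run(clean_cmd, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        results[jobs] = time_command(build_cmd + [f"-j{jobs}"])
    return results


def best(results: dict[int, float]) -> int:
    """Return the job count with the shortest build time."""
    return min(results, key=results.get)


# Example use from inside a configured kernel source tree (not run here):
#   results = sweep(["make"], ["make", "clean"], range(1, 25))
#   for jobs, seconds in sorted(results.items()):
#       print(f"-j{jobs}: {int(seconds // 60)}:{seconds % 60:04.1f}")
#   print("fastest at -j", best(results))
```

Single runs are noisy, as the wobble between 17 and 20 threads suggests, so repeating each job count a few times and comparing the spread would probably give a fairer picture than one pass.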
